What is Data and AI Impact Management?

AI is no longer a side project or a research experiment. It has become central to enterprise ambition: it shapes board agendas, dominates investor conversations, and sits at the heart of corporate strategy. In sectors from finance to manufacturing and from healthcare to retail, AI is now considered a core capability for competitive advantage.

The investment signals are undeniable. Gartner projects global generative AI (GenAI) spending will total $644 billion in 2025, up 76.4% year over year. Meanwhile, IDC forecasts worldwide AI spending will reach $632 billion by 2028, with generative AI accounting for a significant share. Deloitte reports that 79% of executives expect AI to transform their organizations within three years.

But the results tell a different story. Despite billions in investment, enterprises are struggling to turn experimentation into measurable business value. Globally, 71% of CFOs admit they still struggle to monetize AI effectively. A McKinsey survey found that just 10% of organizations systematically track the business value of their AI use cases. A recent MIT Media Lab (Project NANDA) study, The GenAI Divide: State of AI in Business 2025, finds that roughly 95% of GenAI pilots are failing to deliver measurable returns – most remain stuck at proof-of-concept with little or no P&L impact. And, according to PwC, fewer than one in three executives believe their organization can effectively quantify AI’s business impact.

This contradiction – soaring investment, limited evidence of value – has been called the AI performance paradox. For all the promise of AI, its value in most enterprises remains a story of anticipation rather than realization. The paradox is not just an operational headache but also a strategic risk, as boards and CFOs increasingly demand clear evidence of value.

Without a fundamental shift in how AI is managed, the gap between ambition and outcomes will continue to widen.

Lessons from past technology waves

We’ve been here before. Although the current surge in AI is unprecedented in scale and visibility, the underlying pattern is not new. Each major wave of enterprise technology has followed a similar trajectory: initial hype, large-scale investment, and then the hard reality that value creation requires more than enthusiasm and investment.

The “big data” boom a decade ago is a case in point. Organizations stood up massive data lakes and sophisticated analytics stacks, believing that collecting more data would automatically translate into a competitive edge. But in practice, only a minority managed to scale analytics in ways that produced measurable business impact, and most were left with vast repositories of unused or underutilized data.

Early machine learning projects faced similar issues. Many achieved impressive technical accuracy in lab settings, but failed to deliver business benefits once deployed. Technical success rarely translated into operational change, leaving many projects abandoned after the proof-of-concept stage.

GenAI raises the stakes even further. It’s easier to experiment with, faster to prototype, and far more visible to senior leadership than past technologies. That compresses the time between initial hype and the expectation of measurable returns. The MIT Media Lab study underscores this: organizations are running hundreds of pilots but finding few scalable returns.

The recurring message is clear: technology alone does not create impact. Without a discipline for managing value, even the most promising technologies underdeliver.


Why this isn’t about technology limitations

Earlier technology waves often faltered because the underlying tools and infrastructure were not mature enough to support large-scale deployment. AI today is different.

From autonomous workflows to predictive analytics, these systems are production-ready. They are being embedded directly into business processes, reshaping how work is done. Blaming the technology for underperformance is no longer credible.

The challenge lies elsewhere: organizations have not developed the ability to systematically connect AI delivery with measurable business outcomes. The breadth of possible use cases creates the illusion of inevitable value. But potential does not equal realized impact.

Without a discipline that ensures AI initiatives are tied to strategic objectives and measured rigorously, enterprises risk confusing activity with progress.

Why existing paradigms break down

In many organizations, the lack of visible AI impact is acknowledged by executives, but not treated as an urgent or unsolved problem.

Instead, the assumption is that existing tools and governance practices are good enough. A closer look shows that those tools were designed for different purposes and fall short of the challenges at hand.

Tech teams often respond to new challenges by requesting more tools. If data is siloed, the answer is a catalog. If pipelines are unstable, it’s an observability platform. If machine learning use cases multiply, it’s an MLOps platform.

Executives have often approved these additions, only to realize later that the promised business impact never fully materialized. It’s no surprise, then, that many leaders now hesitate before expanding the stack even further.

Still, the tech teams aren’t wrong. Each of these investments indeed solves a real operational problem. But the catch is that none of them tells you what’s actually working. These tools optimize for operability, not necessarily for outcomes. They improve execution efficiency, but say nothing about whether what’s shipped is delivering business value.

On the other hand, business leaders rely on slide decks, dashboards, and spreadsheets to report progress. While these may create the appearance of progress, they turn impact into a narrative artifact rather than a managed discipline. In practice, this means business stakeholders often end up managing perception, not performance. Impact becomes opinion.

The main issue is the absence of a shared frame for value. Business and technical teams speak different languages, optimize for different metrics, and operate on different timelines. Few organizations have established the kind of system-level discipline that can bring these worlds together in an effective way.

The missing strategic management layer

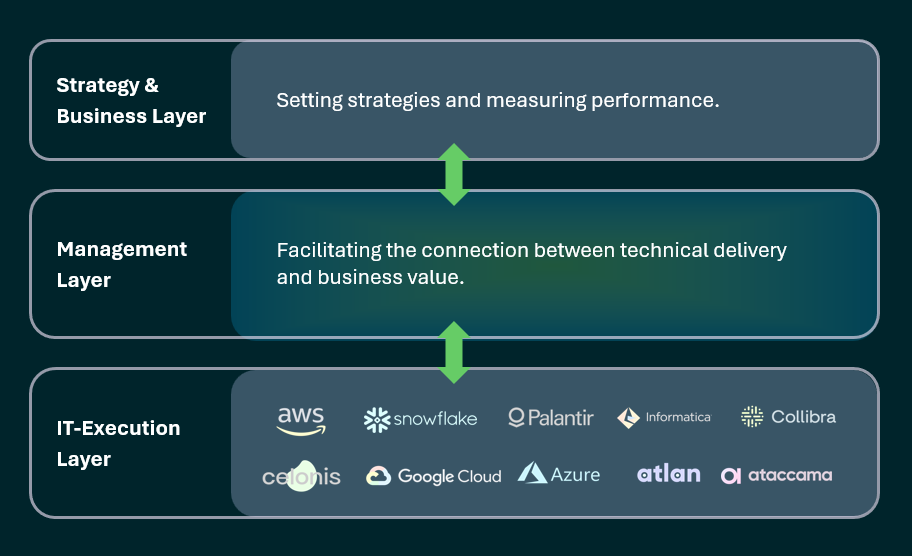

AI currently operates in two disconnected layers. At the IT execution layer, data pipelines are built, models are trained, and infrastructure is maintained. At the strategy and business layer, strategies are set, budgets allocated, and performance is measured in terms of financial or operational KPIs.

Between these two layers lies the missing link: the strategic management layer that actively connects technical delivery with business value.

In other enterprise contexts, this “middle layer” is well established. Product management links engineering output to customer outcomes. Financial management links capital allocation to returns. But in data and AI, no such connective tissue exists.

The result is predictable: no shared language of value, impact measurement that is anecdotal at best, and initiatives that drift from strategy or duplicate efforts elsewhere. Scaling successful use cases becomes a matter of chance rather than a repeatable process.

What’s needed is a bridge between business and technology through structured visibility, shared accountability, and measurable outcomes.

The root causes beneath the surface

Before defining a solution, it’s worth confronting the structural issues that prevent AI from delivering on its promise: not the symptoms that show up at the surface, but the causes that hide beneath it. Until those are confronted, the gap between AI ambition and enterprise reality will only widen.

1. No clear view of what AI is doing or whether it’s working

Most enterprises can’t say, with confidence, which AI initiatives are driving actual business results. There’s a disconnect between model metrics and business metrics. AI teams talk about precision and recall; business leaders care about revenue, risk, and efficiency. These two worlds rarely meet in the middle.

2. Fragmented, duplicative efforts with no shared map

AI initiatives are often launched in silos, by innovation teams, analytics groups, or data units embedded inside functions. These teams don’t know what others are working on. There’s no common inventory, no coordination, and no mechanism to spot overlap. As a result, the same kinds of use cases get built over and over, by different teams, in slightly different ways.
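To make the idea of a shared map more concrete, here is a minimal Python sketch of a common inventory that flags potential overlap. It assumes, hypothetically, that every initiative is described with a standardized business domain and capability tag; the `Initiative` fields, the team names, and the exact-match grouping rule are illustrative choices, not a prescribed schema.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Initiative:
    # Hypothetical standardized description of one AI initiative.
    name: str
    team: str
    domain: str      # business area, e.g. "customer service"
    capability: str  # what the AI does, e.g. "document summarization"


def find_overlaps(inventory: list[Initiative]) -> dict[tuple[str, str], list[Initiative]]:
    """Group initiatives by (domain, capability); keep groups built by more than one team."""
    groups: dict[tuple[str, str], list[Initiative]] = defaultdict(list)
    for item in inventory:
        # Normalize tags so "Customer Service" and "customer service" match.
        key = (item.domain.strip().lower(), item.capability.strip().lower())
        groups[key].append(item)
    return {
        key: items
        for key, items in groups.items()
        if len({i.team for i in items}) > 1
    }


# Illustrative inventory entries (hypothetical).
inventory = [
    Initiative("Ticket summarizer", "Innovation Lab", "Customer Service", "document summarization"),
    Initiative("Case digest bot", "Service Analytics", "customer service", "Document Summarization"),
    Initiative("Churn model", "Marketing Data", "marketing", "churn prediction"),
]

for (domain, capability), items in find_overlaps(inventory).items():
    print(f"{domain} / {capability}: {[i.team for i in items]}")
```

Exact-match tags are deliberately crude, and a curated taxonomy would catch more overlap. Even so, a single shared list with standardized descriptions makes duplicated work visible in a way that siloed efforts never do.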

3. Unclear ownership, no accountability for outcomes

Too often, AI initiatives are handed off without accountability. A model gets developed, it gets “delivered,” and then responsibility disappears. No one is on the hook for whether it drove business impact or even got adopted.

4. Impact doesn’t get measured, so value doesn’t get proven

Even when initiatives succeed, there’s rarely a reliable way to quantify the result. Was it worth the investment? Did it move a business metric that matters? Without a way to capture that, successes don’t scale and leaders hesitate to fund new projects.
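One way to make “Was it worth the investment?” answerable is to record, for each initiative, the value it was expected to deliver next to the value actually measured in production. The Python sketch below assumes hypothetical annual figures in a single currency and uses simple ROI, (benefit - cost) / cost; the `ValueRecord` fields and the numbers are illustrative, and real programs would also need to agree on how benefits are attributed to an initiative.

```python
from dataclasses import dataclass


@dataclass
class ValueRecord:
    # Hypothetical annual figures for one initiative, in a single currency.
    initiative: str
    cost: float              # total investment: build plus run
    expected_benefit: float  # value potential estimated when the initiative was approved
    measured_benefit: float  # value observed on the business metric in production


def roi(record: ValueRecord) -> float:
    """Simple return on investment: (benefit - cost) / cost."""
    return (record.measured_benefit - record.cost) / record.cost


def realization_rate(record: ValueRecord) -> float:
    """Share of the expected value that was actually realized."""
    return record.measured_benefit / record.expected_benefit


# Illustrative figures only.
record = ValueRecord("Invoice matching", cost=200_000, expected_benefit=500_000, measured_benefit=300_000)
print(f"ROI: {roi(record):.0%}")                             # ROI: 50%
print(f"Realized: {realization_rate(record):.0%} of plan")   # Realized: 60% of plan
```

Tracking the realization rate alongside ROI is a deliberate choice: an initiative can pay for itself yet fall well short of the case that justified it, and that is exactly the evidence leaders need before scaling it or funding the next one.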

5. AI becomes a cost center, not a value engine

Without these fixes, AI will follow the same path as its predecessors, data science and machine learning: instead of being treated as a strategic asset, it will become a cost center. It will look like just another line item – experiments with unclear payoff, teams asking for headcount, and outputs that never make it into production. Over time, this erodes trust among executives, finance leaders, and end users alike.

A new discipline: Data and AI Impact Management

A dedicated management discipline can counter this. Leaders need to actively manage the value that data and AI contribute to the organization, through a discipline called “Data and AI Impact Management”.

Data and AI Impact Management is a management approach that turns business opportunities into tangible outcomes by leveraging data and AI. It actively manages business value from the initial use case idea through scaling and operations. By strategically governing and systematically executing the process of generating outcomes and managing value, it makes the impact of data and AI clear, measurable, and demonstrable.

At its core, Data and AI Impact Management is a way to make outcomes non-negotiable. It pairs a product mindset – outcomes over outputs – with value management – qualification, measurement, and tracking – so every initiative has a clearly defined value potential and the evidence to prove (or disprove) it in production.

Practically, it turns AI from a collection of initiatives into a managed portfolio tied to the operating plan, where results compound rather than reset.
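To illustrate what “tied to the operating plan” could look like in practice, the Python sketch below rolls measured value up from individual initiatives to operating-plan objectives. It flags objectives that are behind target, initiatives with no accountable owner, and initiatives that map to no plan objective at all. The `PortfolioItem` structure, the objective names, and all figures are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass
class PortfolioItem:
    # Hypothetical portfolio entry linking one initiative to a plan objective.
    initiative: str
    objective: str          # operating-plan objective the initiative contributes to
    owner: Optional[str]    # accountable business owner, if one exists
    measured_value: float   # value measured in production to date


def review_portfolio(items: list[PortfolioItem], targets: dict[str, float]) -> dict[str, object]:
    """Compare realized value per objective with its plan target and flag gaps."""
    realized: dict[str, float] = defaultdict(float)
    for item in items:
        realized[item.objective] += item.measured_value
    # Objectives whose realized value is still below target, with the remaining gap.
    gaps = {
        objective: target - realized[objective]
        for objective, target in targets.items()
        if realized[objective] < target
    }
    unowned = [item.initiative for item in items if not item.owner]
    unaligned = [item.initiative for item in items if item.objective not in targets]
    return {"gaps": gaps, "unowned": unowned, "unaligned": unaligned}


# Illustrative plan targets and portfolio (hypothetical).
targets = {"reduce cost to serve": 1_000_000, "grow cross-sell revenue": 750_000}
items = [
    PortfolioItem("Ticket summarizer", "reduce cost to serve", "Head of Service", 400_000),
    PortfolioItem("Invoice matching", "reduce cost to serve", "Finance Ops Lead", 300_000),
    PortfolioItem("Next-best offer", "grow cross-sell revenue", None, 800_000),
    PortfolioItem("Chat playground", "explore GenAI", "Innovation Lab", 0),
]
review = review_portfolio(items, targets)
print(review)
```

Here the cost objective is 300,000 short of plan, the cross-sell initiative delivers value but has no named owner, and the playground contributes to no plan objective. Reviewing these three signals every planning cycle, rather than restarting the conversation per project, is what lets results compound.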

Why this matters now

Three factors make Data and AI Impact Management something that organizations can’t afford to ignore:

- Compressed timelines: GenAI adoption has accelerated expectations, leaving less time for trial-and-error.

- Decentralized AI adoption: AI capabilities are being embedded into business units, making centralized visibility harder without a shared management layer.

- Regulatory pressures: The EU AI Act and other emerging regulations require governance structures that mitigate risk and demonstrate compliance, and a shared management layer helps provide them.

The pressure is on for data and AI leaders

No group feels this challenge more acutely than the leaders and teams charged with turning AI ambition into enterprise reality. They are expected to push the boundaries of innovation while delivering short-term results that justify continued investment. The runway for proving value is short. The scrutiny from executives and investors is intense.

When the impact of data and AI teams remains invisible, it’s not just their work that gets questioned. It’s their credibility that’s on the line. In the enterprise arena, expectations are high and patience is in short supply.

Building the discipline

Like product or financial management, Data and AI Impact Management is not a tool but a cross-functional discipline. It brings together three elements:

- People: aligned roles across data, AI, and business teams.

- Processes: standard methods for prioritization, modeling, measurement, and iteration.

- Platforms: integrated systems for visibility, tracking, and decision support.

Some organizations will begin with the basics – an inventory of initiatives, standardized descriptions, and simple impact tracking. Others will move quickly to portfolio-level management. The journey will differ, but the principle is the same: impact over activity.

From experimentation to evidence

The defining shift of this AI era will be from building AI to proving AI works – economically, operationally, and strategically.

Data and AI Impact Management provides the missing strategic management layer that connects technical execution with business outcomes. Organizations that adopt it will move from AI experimentation to AI as a managed portfolio of value-generating assets where every initiative is accountable, measurable, and strategically aligned.

Those that master this discipline will not only justify their AI spend – they will turn it into a repeatable engine for growth.